Voluptates voluptatibus omnis qui porro sed

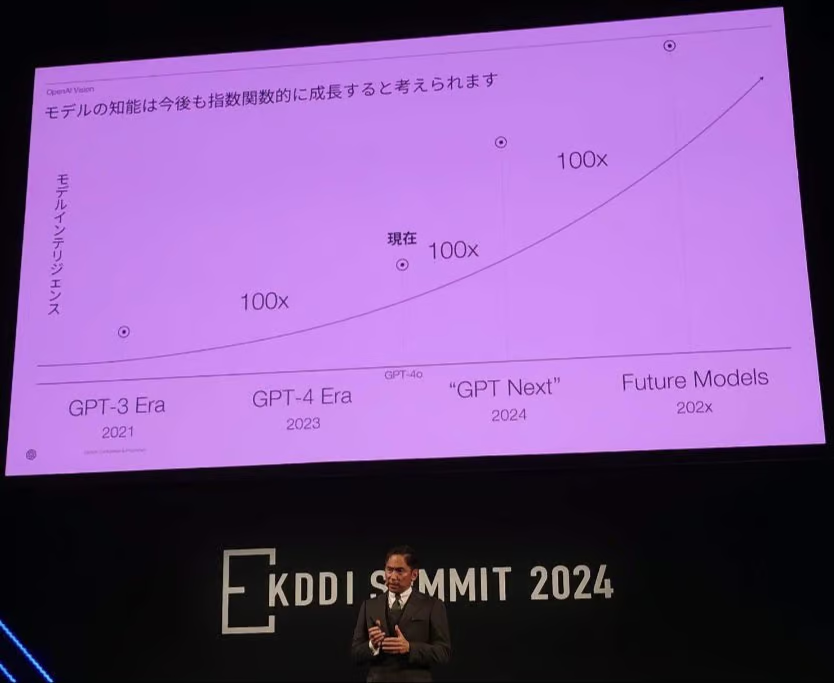

The CEO of OpenAI Japan has announced that the company plans to release “GPT-Next” later this year, with an effective computational load 100 times greater than that of GPT-4. The next-generation model, also referred to as “GPT-4 NEXT,” is expected to be trained using a smaller version of OpenAI’s latest training infrastructure, “Strawberry,” with raw computational resources roughly equivalent to those used for GPT-4. The 100-fold leap therefore refers to effective compute: it comes from architectural improvements and enhanced learning efficiency rather than from additional hardware, and it is expected to significantly increase the model’s capabilities.

Unlike traditional software, AI is improving exponentially, and “GPT-Next” is expected to deliver a nearly 100-fold gain over its predecessors. The slide presented by the CEO emphasized that this increase does not merely reflect scaling of computing resources but rather the combination of improved architecture and enhanced training methods, pushing the boundaries of what AI models can achieve.

Additionally, OpenAI’s “Orion” project, which has gained significant attention, was reportedly trained over several months using the equivalent of 10,000 H100 GPUs. This represents a tenfold increase in raw computational resources compared to GPT-4 and is said to add three orders of magnitude (OOMs). A tenfold increase on its own is only one OOM, so the three-OOM figure presumably also counts the roughly 100-fold gain in effective compute attributed to GPT-Next (10 × 100 = 1,000). Orion is anticipated for release next year, further highlighting the rapid advancements in AI technology expected from OpenAI.
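
The orders-of-magnitude figures quoted above can be checked with a little arithmetic. The sketch below assumes that the tenfold raw-resource increase and the 100-fold effective gain multiply together; this is one reading of the numbers, not something the source confirms. The function and variable names are illustrative only.

```python
import math


def orders_of_magnitude(factor: float) -> float:
    """Return how many powers of ten a multiplicative factor represents."""
    return math.log10(factor)


# Figures quoted in the article, both relative to GPT-4.
raw_compute_factor = 10    # Orion: tenfold raw computational resources
efficiency_factor = 100    # GPT-Next: 100-fold effective computational load

# Assumption (not stated in the source): the two gains multiply.
combined_factor = raw_compute_factor * efficiency_factor

print("raw resources only:", orders_of_magnitude(raw_compute_factor), "OOM")
print("efficiency only:", orders_of_magnitude(efficiency_factor), "OOMs")
print("combined:", orders_of_magnitude(combined_factor), "OOMs")
```

Under that assumption the combined factor is 1,000, which is three OOMs, whereas the tenfold hardware increase alone accounts for just one.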